最近有人提出能不能发一些大数据相关的知识,No problem ! 今天先从安装环境说起,搭建起自己的学习环境。

Hadoop的三种搭建方式以及使用环境:

单机版适合开发调试; 伪分布式适合模拟集群学习; 完全分布式适用生产环境。 先决条件 准备3台服务器 虚拟机、物理机、云上实例均可,本篇使用Openstack私有云里面的3个实例进行安装部署。

操作系统及软件版本 服务器 系统 内存 IP 规划 JDK HADOOP node1 Ubuntu 18.04.2 LTS 8G 10.101.18.21 master JDK 1.8.0_222 hadoop-3.2.1 node2 Ubuntu 18.04.2 LTS 8G 10.101.18.8 slave1 JDK 1.8.0_222 hadoop-3.2.1 node3 Ubuntu 18.04.2 LTS 8G 10.101.18.24 slave2 JDK 1.8.0_222 hadoop-3.2.1

三台机器安装JDK 因为Hadoop是用Java语言编写的,所以计算机上需要安装Java环境,我在这使用JDK 1.8.0_222(推荐使用Sun JDK)

安装命令

1 sudo apt install openjdk-8-jdk-headless

配置JAVA环境变量,在当前用户根目录下的.profile文件最下面加入以下内容:

1 2 3 export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64export PATH=$JAVA_HOME /bin:$PATH export CLASSPATH=.:$JAVA_HOME /lib/dt.jar:$JAVA_HOME /lib/tools.jar

使用source命令让立即生效

host配置 修改三台服务器的hosts文件

1 2 3 4 5 6 7 vim /etc/hosts 10.101.18.21 master 10.101.18.8 slave1 10.101.18.24 slave2

免密登陆配置 生产秘钥 master免密登录到slave中 1 2 3 ssh-copy-id -i ~/.ssh/id_rsa.pub master ssh-copy-id -i ~/.ssh/id_rsa.pub slave1 ssh-copy-id -i ~/.ssh/id_rsa.pub slave2

测试免密登陆 1 2 3 ssh master ssh slave1 ssh slave2

Hadoop搭建 我们先在Master节点下载Hadoop包,然后修改配置,随后复制到其他Slave节点稍作修改就可以了。

下载安装包,创建Hadoop目录 1 2 3 4 5 6 7 8 wget http://http://apache.claz.org/hadoop/common/hadoop-3.2.1//hadoop-3.2.1.tar.gz sudo tar -xzvf hadoop-3.2.1.tar.gz -C /usr/local sudo chown -R ubuntu:ubuntu hadoop-3.2.1.tar.gz sudo mv hadoop-3.2.1 hadoop

配置Master节点的Hadoop环境变量 和配置JDK环境变量一样,编辑用户目录下的.profile文件, 添加Hadoop环境变量:

1 2 3 export HADOOP_HOME=/usr/local /hadoopexport PATH=$PATH :$HADOOP_HOME /bin export HADOOP_CONF_DIR=$HADOOP_HOME /etc/hadoop

执行 source .profile 让立即生效

配置Master节点 Hadoop 的各个组件均用XML文件进行配置, 配置文件都放在 /usr/local/hadoop/etc/hadoop 目录中:

core-site.xml:配置通用属性,例如HDFS和MapReduce常用的I/O设置等 hdfs-site.xml:Hadoop守护进程配置,包括namenode、辅助namenode和datanode等 mapred-site.xml:MapReduce守护进程配置 yarn-site.xml:资源调度相关配置 a. 编辑core-site.xml文件,修改内容如下:

1 2 3 4 5 6 7 8 9 10 11 <configuration > <property > <name > hadoop.tmp.dir</name > <value > file:/usr/local/hadoop/tmp</value > <description > Abase for other temporary directories.</description > </property > <property > <name > fs.defaultFS</name > <value > hdfs://master:9000</value > </property > </configuration >

参数说明:

fs.defaultFS:默认文件系统,HDFS的客户端访问HDFS需要此参数 hadoop.tmp.dir:指定Hadoop数据存储的临时目录,其它目录会基于此路径, 建议设置到一个足够空间的地方,而不是默认的/tmp下 如没有配置hadoop.tmp.dir参数,系统使用默认的临时目录:/tmp/hadoo-hadoop。而这个目录在每次重启后都会被删除,必须重新执行format才行,否则会出错。

b. 编辑hdfs-site.xml,修改内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 <configuration > <property > <name > dfs.replication</name > <value > 3</value > </property > <property > <name > dfs.name.dir</name > <value > /usr/local/hadoop/hdfs/name</value > </property > <property > <name > dfs.data.dir</name > <value > /usr/local/hadoop/hdfs/data</value > </property > </configuration >

参数说明:

dfs.replication:数据块副本数 dfs.name.dir:指定namenode节点的文件存储目录 dfs.data.dir:指定datanode节点的文件存储目录 c. 编辑mapred-site.xml,修改内容如下:

1 2 3 4 5 6 7 8 9 10 <configuration > <property > <name > mapreduce.framework.name</name > <value > yarn</value > </property > <property > <name > mapreduce.application.classpath</name > <value > $HADOOP_HOME/share/hadoop/mapreduce/*:$HADOOP_HOME/share/hadoop/mapreduce/lib/*</value > </property > </configuration >

d. 编辑yarn-site.xml,修改内容如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 <configuration > <property > <name > yarn.nodemanager.aux-services</name > <value > mapreduce_shuffle</value > </property > <property > <name > yarn.resourcemanager.hostname</name > <value > master</value > </property > <property > <name > yarn.nodemanager.env-whitelist</name > <value > JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME</value > </property > </configuration >

e. 编辑workers, 修改内容如下:

配置worker节点

配置Slave节点 将Master节点配置好的Hadoop打包,发送到其他两个节点:

1 2 3 4 5 tar -cxf hadoop.tar.gz /usr/local /hadoop scp hadoop.tar.gz ubuntu@slave1:~ scp hadoop.tar.gz ubuntu@slave2:~

在其他节点加压Hadoop包到/usr/local目录

1 sudo tar -xzvf hadoop.tar.gz -C /usr/local /

配置Slave1和Slaver2两个节点的Hadoop环境变量:

1 2 3 export HADOOP_HOME=/usr/local /hadoopexport PATH=$PATH :$HADOOP_HOME /bin export HADOOP_CONF_DIR=$HADOOP_HOME /etc/hadoop

启动集群 格式化HDFS文件系统 进入Master节点的Hadoop目录,执行一下操作:

1 bin /hadoop namenode -format

格式化namenode,第一次启动服务前执行的操作,以后不需要执行。

截取部分日志(看第5行 日志表示格式化成功):

1 2 3 4 5 6 7 8 9 2019 -11 -11 13 :34 :18 ,960 INFO util.GSet: VM type = 64 -bit2019 -11 -11 13 :34 :18 ,960 INFO util.GSet: 0.029999999329447746 % max memory 1.7 GB = 544.5 KB2019 -11 -11 13 :34 :18 ,961 INFO util.GSet: capacity = 2 ^16 = 65536 entries2019 -11 -11 13 :34 :18 ,994 INFO namenode.FSImage: Allocated new BlockPoolId: BP-2017092058 -10.101 .18 .21 -1573450458983 2019 -11 -11 13 :34 :19 ,010 INFO common.Storage: Storage directory /usr/local/hadoop/hdfs/name has been successfully formatted.2019 -11 -11 13 :34 :19 ,051 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 using no compression2019 -11 -11 13 :34 :19 ,186 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/hdfs/name/current/fsimage.ckpt_0000000000000000000 of size 401 bytes saved in 0 seconds .2019 -11 -11 13 :34 :19 ,207 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0 2019 -11 -11 13 :34 :19 ,214 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

启动Hadoop集群 启动过程遇到的问题与解决方案:

a. 错误:master: rcmd: socket: Permission denied

解决 :

执行 echo "ssh" > /etc/pdsh/rcmd_default

b. 错误:JAVA_HOME is not set and could not be found.

解决 :

修改三个节点的hadoop-env.sh,添加下面JAVA环境变量

1 export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

使用jps命令查看运行情况 Master节点执行输出:

1 2 3 4 19557 ResourceManager19914 Jps19291 SecondaryNameNode18959 NameNode

Slave节点执行输入:

1 2 3 18580 NodeManager18366 DataNode18703 Jps

查看Hadoop集群状态 查看结果:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 Configured Capacity: 41258442752 (38.42 GB) Present Capacity: 5170511872 (4.82 GB) DFS Remaining: 5170454528 (4.82 GB) DFS Used: 57344 (56 KB) DFS Used%: 0.00 % Replicated Blocks: Under replicated blocks: 0 Blocks with corrupt replicas: 0 Missing blocks: 0 Missing blocks (with replication factor 1 ) : 0 Low redundancy blocks with highest priority to recover: 0 Pending deletion blocks: 0 Erasure Coded Block Groups: Low redundancy block groups: 0 Block groups with corrupt internal blocks: 0 Missing block groups: 0 Low redundancy blocks with highest priority to recover: 0 Pending deletion blocks: 0 ------------------------------------------------- Live datanodes (2 ) : Name: 10.101.18.24:9866 (slave2) Hostname: slave2 Decommission Status : Normal Configured Capacity: 20629221376 (19.21 GB) DFS Used: 28672 (28 KB) Non DFS Used: 16919797760 (15.76 GB) DFS Remaining: 3692617728 (3.44 GB) DFS Used%: 0.00% DFS Remaining%: 17.90% Configured Cache Capacity: 0 (0 B) Cache Used: 0 (0 B) Cache Remaining: 0 (0 B) Cache Used%: 100.00% Cache Remaining%: 0.00% Xceivers: 1 Last contact: Mon Nov 11 15:00:27 CST 2019 Last Block Report: Mon Nov 11 14:05:48 CST 2019 Num of Blocks: 0 Name: 10.101.18.8:9866 (slave1) Hostname: slave1 Decommission Status : Normal Configured Capacity: 20629221376 (19.21 GB) DFS Used: 28672 (28 KB) Non DFS Used: 19134578688 (17.82 GB) DFS Remaining: 1477836800 (1.38 GB) DFS Used%: 0.00% DFS Remaining%: 7.16% Configured Cache Capacity: 0 (0 B) Cache Used: 0 (0 B) Cache Remaining: 0 (0 B) Cache Used%: 100.00% Cache Remaining%: 0.00% Xceivers: 1 Last contact: Mon Nov 11 15:00:24 CST 2019 Last Block Report: Mon Nov 11 13:53:57 CST 2019 Num of Blocks: 0

关闭Hadoop Web查看Hadoop集群状态 在浏览器输入 http://10.101.18.21:9870 ,结果如下:

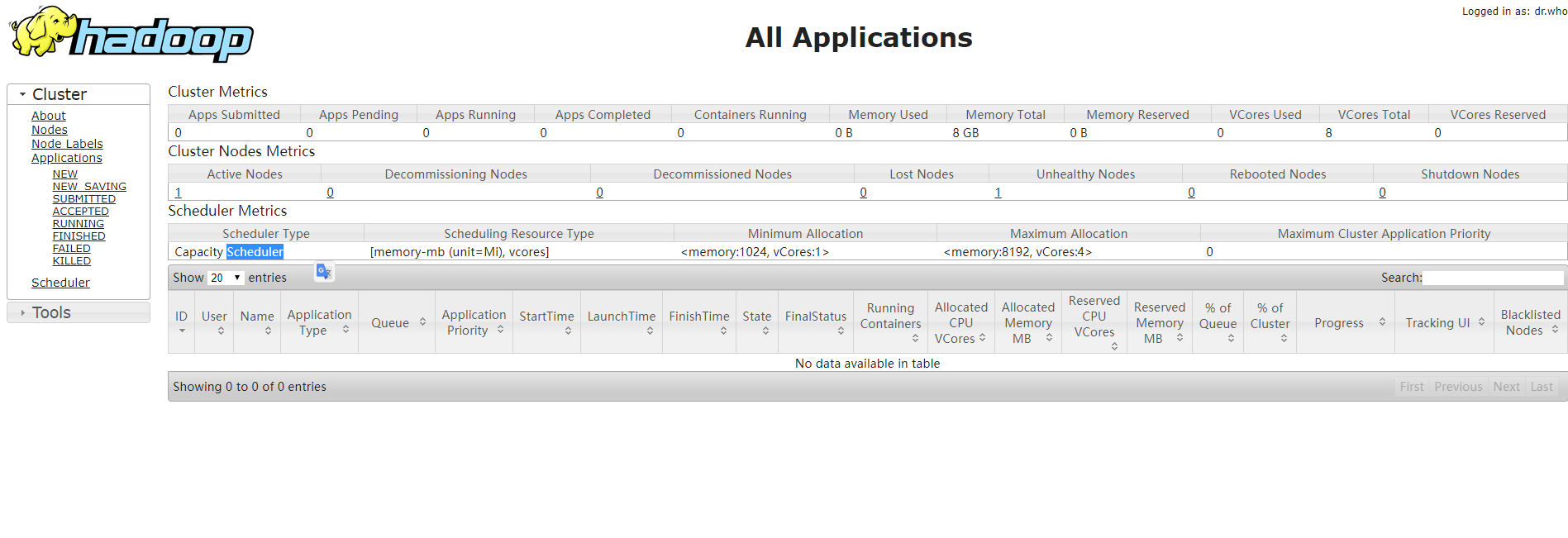

在浏览器输入 http://10.101.18.21:8088 ,结果如下: